Input–Output Organization

Input–Output (I/O) Organization deals with how a computer system communicates with external devices and manages data transfer between the CPU, memory, and peripherals. It includes the hardware and software mechanisms that control, synchronize, and optimize I/O operations.

Basic Idea

- I/O organization manages communication with input, output, and storage devices
- Handles speed differences, data format differences, and device control
- Uses special interfaces and transfer techniques to improve efficiency

Examples

Input: Keyboard, Mouse, Scanner, Microphone, Barcode reader

Output: Monitor, Printer, Speakers, Plotter

Storage: Hard disk, USB drive, SSD

Needs of I/O Organization

CPU and memory are very fast, while I/O devices are slow and device-dependent. Differences occur in:

- Speed
- Data format
- Transfer rate
- Control method

Special I/O mechanisms are required to manage these mismatches.

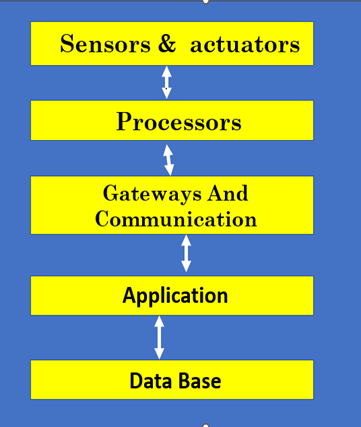

Basic I/O Structure

Data Path:

Input Device → I/O Interface → System Bus → Memory/CPU

Output Device ← I/O Interface ← System Bus ← Memory/CPU

I/O Interface (I/O Module)

Acts as a translator and controller between CPU and devices.

Functions:

- Device control & timing
- Data buffering
- Error detection
- Signal conversion
- Status reporting

Modes of I/O Data Transfer

1. Programmed I/O (Polling)

- CPU continuously checks the device status
- Simple but wastes CPU time
- Suitable for simple/slow systems

2. Interrupt-Driven I/O

- Device sends interrupt when ready
- CPU services device via ISR
- Better CPU utilization
- Some interrupt overhead

3. Direct Memory Access (DMA)

- Data moves directly between device and memory
- CPU only initializes transfer
- Very fast; best for large data blocks
- Needs extra hardware

I/O Addressing Methods

Memory-Mapped I/O

- Devices use memory addresses
- Same instructions as memory access
- Easier programming

Isolated (Port-Mapped) I/O

- Separate I/O address space
- Special IN/OUT instructions used
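The difference between the two addressing methods can be sketched with a toy bus model. This is only an illustration under assumptions: the `Bus` class, the `UART_DATA` address, and the port numbers are hypothetical and do not describe any real machine.

```python
class Bus:
    """Toy bus with RAM, one memory-mapped register, and a separate port space."""

    UART_DATA = 0xFF00  # assumed address of a memory-mapped device register

    def __init__(self):
        self.ram = bytearray(0x10000)
        self.ports = {}       # isolated I/O: its own address space
        self.uart_out = []    # bytes "sent" to the hypothetical device

    # Memory-mapped I/O: ordinary load/store, the address decoder picks the target
    def store(self, addr, value):
        if addr == self.UART_DATA:
            self.uart_out.append(value)
        else:
            self.ram[addr] = value

    def load(self, addr):
        if addr == self.UART_DATA:
            return 0          # placeholder: this toy device has nothing to read
        return self.ram[addr]

    # Isolated I/O: dedicated IN/OUT operations that never touch RAM
    def out(self, port, value):
        self.ports[port] = value

    def inp(self, port):
        return self.ports.get(port, 0)
```

Note that port `0x60` and memory address `0x60` are unrelated locations in this model, which is the defining property of isolated I/O.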

I/O Buffering

Temporary storage during transfer to handle speed mismatch.

Types:

- Single buffer: one temporary area
- Double buffer: one buffer is filled while the other is being transferred
- Circular buffer: a fixed area reused in a ring, suited to continuous streaming data
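A circular buffer can be sketched in a few lines. This is a minimal illustration, not a driver-grade implementation; the class name `RingBuffer` and its methods are chosen here for the example.

```python
class RingBuffer:
    """Fixed-size circular buffer; refuses writes when full."""

    def __init__(self, capacity):
        self.data = [None] * capacity
        self.capacity = capacity
        self.head = 0    # next slot to read
        self.tail = 0    # next slot to write
        self.count = 0   # number of stored items

    def put(self, item):
        if self.count == self.capacity:
            return False                 # full: the producer must wait
        self.data[self.tail] = item
        self.tail = (self.tail + 1) % self.capacity   # wrap around
        self.count += 1
        return True

    def get(self):
        if self.count == 0:
            return None                  # empty: the consumer must wait
        item = self.data[self.head]
        self.head = (self.head + 1) % self.capacity   # wrap around
        self.count -= 1
        return item
```

The fast side (producer) and the slow side (consumer) only meet at the `count` check, which is how the buffer absorbs short bursts of speed mismatch.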

I/O Controllers and Channels

- I/O Controller: Controls and monitors devices
- I/O Channel: An advanced controller that executes I/O tasks independently

Method Comparison

| Method | CPU Use | Speed | Cost |
|---|---|---|---|
| Programmed I/O | High | Slow | Low |
| Interrupt I/O | Medium | Better | Medium |
| DMA | Low | Fast | High |

*********************************************************

Peripheral Devices

Peripheral devices are external hardware components connected to a computer system. They are not part of the CPU but operate under CPU control through I/O interfaces and controllers. They support data input, result output, storage, and communication.

Basic Computer System Flow with Peripherals

Input Devices → I/O Controller → CPU (ALU, CU, Registers) → Main Memory → Output Devices

Classification of Peripheral Devices

1. Input Devices

- Used to enter data and instructions.
- Examples: Keyboard, Mouse, Scanner, Microphone.
- Convert user actions or physical signals into digital data for the CPU.

2. Output Devices

- Display or produce processed results.
- Examples: Monitor, Printer, Speakers.
- Convert digital results into visual, printed, or audio form.

3. Storage Devices (Secondary Storage)

- Provide permanent data storage.
- Types:
  - Magnetic: Hard disk, Tape
  - Optical: CD, DVD, Blu-ray
  - Solid-state: SSD, Pen drive, Memory card

4. Communication Devices

- Enable data transfer between computers.
- Examples: Modem, Network Interface Card (NIC).
- Support wired and wireless networking.

Peripheral Device Controllers

Since peripherals work at different speeds, controllers are used between device and CPU.

Functions:

- Data buffering
- Error detection
- Device control
- Speed matching

Device ↔ Controller ↔ CPU

Data Transfer Methods

- Programmed I/O: CPU continuously checks the device (slow, CPU busy).
- Interrupt-Driven I/O: Device interrupts the CPU when ready (more efficient).
- DMA (Direct Memory Access): Data transfers directly between device and memory without continuous CPU involvement (fastest).

Input vs Output Devices (Difference)

- Input devices send data to the CPU (User → Computer).
- Output devices receive data from the CPU (Computer → User).

Advantages

- Extend system capability
- Improve user interaction
- Enable storage and communication

Limitations

- Slower than CPU
- Need device drivers
- Require interfaces like USB, HDMI, SATA

************************************************************

I/O Interface (Input/Output Interface)

An I/O Interface is a hardware module placed between the CPU and peripheral devices to enable smooth data transfer by resolving differences in speed, data format, and control signals.

Need of I/O Interface

- Resolves speed mismatch between fast CPU and slow I/O devices
- Handles data format mismatch (for example, serial device data vs parallel bus words)
- Manages control and timing differences
- Performs device selection and identification
- Provides data buffering using temporary storage

Main Components of I/O Interface

- Address Decoder – Identifies and selects the I/O device
- Data Register – Stores data during transfer
- Control Register – Holds commands like READ/WRITE/START/STOP
- Status Register – Shows device status (Ready, Busy, Error)
- Buffer – Temporarily stores data to manage speed differences

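Types of I/O Interfaces

1. Serial Interface

- Transfers 1 bit at a time
- Needs fewer wires, so it is cheaper; traditionally slower per clock, although modern high-speed serial links (USB, SATA, PCIe) outperform older parallel buses
- Suitable for long-distance communication

2. Parallel Interface

- Transfers multiple bits simultaneously
- Faster per clock cycle over short distances
- Used where speed is important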
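The difference between the two interface types comes down to how a byte is placed on the wires. The sketch below shows a byte being split into a stream of single bits and rebuilt on the other side. Sending the least significant bit first is an assumption made for the example (many UARTs do this, but not every serial protocol), and the function names are illustrative.

```python
def serialize(byte):
    """Split one byte into 8 bits, least significant bit first (assumed order)."""
    return [(byte >> i) & 1 for i in range(8)]


def deserialize(bits):
    """Rebuild the byte from bits received in the same LSB-first order."""
    return sum(bit << i for i, bit in enumerate(bits))
```

A parallel interface would instead place all eight bits on eight wires in a single transfer.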

I/O Data Transfer Methods

1. Programmed I/O

- CPU continuously checks device status (busy waiting)
- Simple but wastes CPU time

2. Interrupt-Driven I/O

- Device interrupts CPU when ready
- Better CPU utilization
- Has interrupt handling overhead

3. Direct Memory Access (DMA)

- DMA controller transfers data between device and memory
- Very fast
- Requires complex hardware

Isolated I/O vs Memory-Mapped I/O

| Feature | Isolated I/O | Memory-Mapped I/O |
|---|---|---|
| Address Space | Separate | Common |
| Instructions | Special I/O instructions | Normal memory instructions |

Handshaking

- Technique to synchronize CPU and device during transfer
- Uses signals like READY and ACK to confirm data exchange

Advantages of I/O Interface

- Ensures reliable data transfer
- Hides device-level complexity from CPU
- Supports multiple devices
- Improves overall system performance

*******************************************************************

Asynchronous Data Transfer

Asynchronous data transfer is a method of communication between digital components that does not use a common clock. Instead of clock pulses, it uses control signals (handshaking) to coordinate when data is sent and received. The sender and receiver agree on data validity and acceptance through signal exchange.

Needs:

- When sender and receiver run at different speeds or independent clocks
- Interfacing with external peripherals (keyboard, sensors, serial devices)
- Low-power and low-latency systems
- Large systems where avoiding clock skew and EMI is important

Basic Signals

- DATA – carries the information bits
- REQ (Request) – sender signals that data is ready
- ACK (Acknowledge) – receiver signals data accepted
- Strobe/Valid – single signal sometimes used to indicate valid data

Timing terms: setup time, hold time, and propagation delay must be considered, especially when crossing clocked domains.

Handshake Protocols

1. Four-Phase (Two-Wire REQ/ACK) Handshake

Each transfer completes in four steps:

- Sender puts data and sets REQ = 1
- Receiver reads data and sets ACK = 1
- Sender resets REQ = 0
- Receiver resets ACK = 0

Features: Simple, reliable, widely used.
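The four steps can be traced in a small software model. This is only a sequential sketch with an illustrative function name; real hardware runs the sender and receiver concurrently and must respect setup and hold times, which the model ignores.

```python
def four_phase_transfer(value):
    """Return the (REQ, ACK) levels after each step, plus the latched value."""
    req, ack = 0, 0
    trace = [(req, ack)]        # idle state

    data_bus = value            # step 1: sender drives data...
    req = 1                     # ...and raises REQ
    trace.append((req, ack))

    latched = data_bus          # step 2: receiver latches data...
    ack = 1                     # ...and raises ACK
    trace.append((req, ack))

    req = 0                     # step 3: sender drops REQ
    trace.append((req, ack))

    ack = 0                     # step 4: receiver drops ACK, back to idle
    trace.append((req, ack))
    return trace, latched
```

Both signals return to zero at the end, which is why this scheme is also called a return-to-zero handshake.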

2. Two-Phase (Transition) Handshake

- Uses signal transitions (edges) instead of signal levels
- Each transfer uses only two transitions
- Faster and lower power
- Design is more complex
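A rough software model of transition signalling, assuming both wires start at 0: each transfer toggles REQ once and ACK once, and the wires are never reset. The function name and return shape are choices made for this sketch.

```python
def two_phase_transfers(values):
    """Send each value using one REQ toggle and one ACK toggle."""
    req = ack = 0
    received = []
    transitions = 0
    for value in values:
        data = value
        req ^= 1                 # sender toggles REQ: an edge means "new data valid"
        transitions += 1
        if req == ack:           # receiver expects REQ to differ from ACK here
            raise RuntimeError("protocol error")
        received.append(data)    # receiver latches the data
        ack ^= 1                 # receiver toggles ACK: an edge means "accepted"
        transitions += 1
    return received, transitions, (req, ack)
```

Three transfers cost six transitions here, compared with twelve for the four-phase protocol, which is where the speed and power advantage comes from.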

Muller C-Element

- Basic asynchronous circuit component
- Output = 1 if all inputs are 1
- Output = 0 if all inputs are 0
- Otherwise, output holds previous value
- Used for handshake completion detection and event synchronization
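The behaviour described above can be written as a small function. This is a behavioural model only; the function name and the explicit `previous` argument (standing in for the element's internal state) are choices made for this sketch.

```python
def c_element(inputs, previous):
    """Muller C-element: follow the inputs when they agree, otherwise hold."""
    if all(inputs):
        return 1            # all inputs are 1
    if not any(inputs):
        return 0            # all inputs are 0
    return previous         # inputs disagree: keep the previous output
```

Feeding it the input sequence (0,0), (1,0), (1,1), (0,1), (0,0) shows the output rising only once both inputs are high and falling only once both are low.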

Metastability & Synchronizers

- Occurs when an asynchronous signal changes too close to the clock edge of a flip-flop, leaving the output temporarily at an undefined level
- Can cause logic errors

Prevention methods:

- Use 2-stage or 3-stage synchronizer flip-flops
- Design for higher MTBF (Mean Time Between Failures)

Advantages

- No global clock required
- Reduced clock skew problems
- Lower dynamic power
- Suitable for variable-latency and peripheral interfaces

Disadvantages

- More complex design and verification
- Harder testing and debugging
- Metastability risk at clock boundaries
- Less tool and synthesis support compared to synchronous systems

**************************************************************************

Modes of Data Transfer

Data Transfer

Data transfer means moving data between:

- CPU ↔ Memory
- CPU ↔ I/O Devices
- Memory ↔ I/O Devices

Since I/O devices are slower than the CPU, special transfer modes are used for efficient communication.

Classification of Data Transfer Modes

- Programmed I/O (Polling)
- Interrupt-Driven I/O
- Direct Memory Access (DMA)

1. Programmed I/O (Polling)

Concept: CPU controls the entire transfer and continuously checks whether the device is ready.

Working (Short Steps):

- CPU sends I/O command
- CPU repeatedly checks device status (polling)
- When ready → CPU transfers data
- CPU resumes execution

Key Point: CPU waits actively (busy waiting).

Advantages:

- Simple method
- No extra hardware needed

Disadvantages:

- Wastes CPU time
- Inefficient when the device is slow, because the CPU spins for the whole wait

Best For: Simple, low-speed devices in systems where the CPU has little else to do.
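The polling loop can be sketched against a simulated device. Everything here is hypothetical: the `SlowDevice` class, the `READY` bit position, and the idea that the device becomes ready after a fixed number of status reads are assumptions made so the example can run without hardware.

```python
READY = 0x01  # assumed position of the READY flag in the status register


class SlowDevice:
    """Hypothetical device that becomes ready after `delay` status reads."""

    def __init__(self, data, delay):
        self._data = data
        self._delay = delay

    def read_status(self):
        if self._delay > 0:
            self._delay -= 1
            return 0            # still busy
        return READY

    def read_data(self):
        return self._data


def polled_read(dev):
    """Busy-wait until READY, then read; returns (data, wasted_polls)."""
    wasted = 0
    while not (dev.read_status() & READY):
        wasted += 1             # the CPU does no useful work here
    return dev.read_data(), wasted
```

The `wasted` counter makes the cost of busy waiting visible: the slower the device, the more polls are spent doing nothing.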

2. Interrupt-Driven I/O

Concept: Device notifies the CPU using an interrupt signal when it is ready, so the CPU does not keep checking.

Working (Short Steps):

- CPU issues I/O command
- CPU performs other tasks
- Device sends interrupt when ready
- CPU runs Interrupt Service Routine (ISR)
- Data transfer occurs
- CPU resumes previous task

Key Point: Event-driven transfer.

Advantages:

- Better CPU utilization
- No busy waiting

Disadvantages:

- Interrupt handling overhead
- Slower than DMA for large data blocks

Best For: Medium-speed devices and asynchronous events.
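The steps above can be imitated with a simple loop in which the main program keeps working and an ISR runs only when the device signals readiness. This is a simplified sketch: the function name and the single `interrupt_at` step are assumptions, and the state save/restore that real hardware performs is left implicit.

```python
def run(cpu_steps, interrupt_at, device_data):
    """Simulate a CPU loop; the device raises an interrupt at one step."""
    log = []
    received = []

    def isr():
        # Interrupt Service Routine: perform the transfer, then return
        received.append(device_data)
        log.append("ISR")

    for step in range(cpu_steps):
        irq_pending = (step == interrupt_at)   # device signals it is ready
        if irq_pending:
            isr()                              # control transfers to the ISR
        log.append(f"work{step}")              # main program continues
    return log, received
```

Unlike the polling case, no step of the main program is spent checking the device.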

3. Direct Memory Access (DMA)

Concept: A DMA controller transfers data directly between I/O and memory without continuous CPU involvement.

Working (Short Steps):

- CPU initializes DMA (address, count, direction, device)
- DMA requests system bus
- CPU grants bus
- DMA transfers data directly (I/O ↔ Memory)
- DMA interrupts CPU after completion

DMA Transfer Modes:

- Burst Mode – Entire block at once
- Cycle Stealing – One word per stolen bus cycle
- Transparent Mode – Uses only idle CPU cycles

Advantages:

- Very fast block transfer
- CPU free during transfer
- Highest efficiency

Disadvantages:

- Complex hardware
- Higher cost

Best For: Large, high-speed data transfers.

Quick Comparison

| Feature | Programmed I/O | Interrupt I/O | DMA |
|---|---|---|---|
| CPU involvement | High | Medium | Low |
| CPU waiting | Yes | No | No |
| Transfer path | Via CPU | Via CPU | Direct Memory ↔ I/O |
| Efficiency | Low | Moderate | High |
| Hardware need | Simple | Moderate | Complex |
| Use case | Simple devices | Event devices | Bulk transfer |

**************************************************************************

Priority Interrupt

An interrupt is a signal from an I/O device or internal event that temporarily stops normal CPU execution and transfers control to an Interrupt Service Routine (ISR). Interrupts improve CPU utilization because I/O devices are much slower than the CPU, and polling wastes time.

Priority Interrupt — Definition

A priority interrupt system assigns a priority level to each interrupt source. When multiple interrupts occur at the same time, the CPU services the highest-priority request first.

Needs

- Multiple I/O devices may request service simultaneously
- Some events are time-critical (like power failure)
- Without priority:
  - Critical tasks may be delayed
  - System reliability decreases

Example priority order: Power failure > Disk I/O > Keyboard > Printer

Basic Working Principle

- Each interrupt line has a priority level
- All interrupt requests are checked
- Highest priority interrupt is selected
- Lower priority interrupts are temporarily masked
- CPU saves current state → runs ISR → restores state → resumes program

Higher-priority interrupts can preempt lower-priority ISR execution.

Types of Priority Interrupts

1. Hardware Priority Interrupt

Priority is decided by hardware circuits. It is fast and used in real-time systems.

Common Methods:

Daisy Chaining

- Devices connected in series
- Priority based on physical position
- First requesting device captures acknowledge signal
- ✔ Simple, low cost
- ✖ Fixed priority, slower for low-priority devices

Priority Encoder

- Uses encoder circuit to select highest request
- Produces binary code of highest priority interrupt
- ✔ Faster, flexible, efficient
- ✖ More hardware complexity and cost
- ✖ Possible starvation of low-priority devices
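Both hardware schemes can be modelled behaviourally. In this sketch, index 0 is assumed to be the highest-priority line (or the device closest to the CPU in the chain); the function names and return shapes are illustrative, not taken from any specific controller.

```python
def daisy_chain(requests):
    """Pass the acknowledge down the chain; the first requester absorbs it.

    Returns (granted_position, ack_seen_by_each_device).
    """
    ack_in = 1
    granted = None
    seen = []
    for position, requesting in enumerate(requests):
        seen.append(ack_in)
        if ack_in and requesting:
            granted = position
            ack_in = 0          # block the acknowledge for downstream devices
    return granted, seen


def priority_encoder(requests):
    """Return the binary code of the highest-priority asserted line, or None."""
    width = max(1, (len(requests) - 1).bit_length())
    for line, asserted in enumerate(requests):
        if asserted:
            return format(line, f"0{width}b")
    return None
```

In the daisy chain, a device further down the line never even sees the acknowledge while an upstream device is requesting, which is why its priority is fixed by position.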

2. Software Priority Interrupt (Polling)

Priority is decided by software.

Working:

- CPU jumps to a common ISR
- ISR checks device flags one by one (if-else order)
- The first active device found in that order, which is the highest-priority one, is serviced

✔ Simple design

✖ Slower due to continuous checking
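A common ISR that polls device flags in priority order might look like the sketch below. The device names and the dictionary of flags are hypothetical stand-ins for hardware status bits.

```python
def common_isr(flags, priority_order):
    """Service the first device whose flag is set, checking in priority order."""
    for device in priority_order:       # highest priority is checked first
        if flags.get(device):
            flags[device] = False       # clear the request once it is serviced
            return device
    return None                         # spurious interrupt: nothing pending
```

With the order `["power", "disk", "keyboard", "printer"]` and pending requests from both the disk and the printer, the disk is serviced first and the printer on the next interrupt.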

Priority vs Non-Priority Interrupt

| Feature | Priority | Non-Priority |
|---|---|---|
| Service order | Based on priority | First come |
| Critical handling | Yes | No |
| Complexity | Higher | Lower |
| Efficiency | Higher | Lower |

Applications

- Operating systems
- Embedded systems
- Real-time control
- Industrial automation
- Communication systems

************************************************************************

Direct Memory Access (DMA)

Definition:

Direct Memory Access (DMA) is a data transfer technique in which an I/O device transfers data directly to/from main memory without continuous CPU involvement. The CPU only initializes the transfer and then performs other tasks.

Need for DMA

DMA is used to overcome limitations of programmed and interrupt-driven I/O:

- Enables high-speed data transfer
- Reduces CPU overhead
- Improves overall system performance

DMA Controller – Main Components

1. Address Register

- Stores starting memory address
- Acts as a pointer to current transfer location
- Automatically increments/decrements after each transfer
- Types: Base Address Register (BAR), Current Address Register (CAR)

2. Word Count Register

- Stores number of bytes/words to transfer
- Decrements after each transfer
- When it becomes zero → transfer complete signal (Terminal Count)
- Often 16-bit (up to 65,536 units)

3. Control Register

- Specifies transfer type (Read/Write)
- Selects transfer mode (burst, cycle stealing, etc.)
- Enables/disables interrupt
- Contains status/control flags

4. Status Register

- Indicates completion or error condition
- Sets “Done” flag after transfer

Working of DMA (Steps)

- CPU loads DMA registers with:
  - Starting address
  - Word count
  - Transfer type
- I/O device sends DMA request (DRQ)
- DMA controller requests system bus
- CPU grants the bus and stays off it during the transfer
- DMA transfers data between memory and I/O device
- On completion → DMA sends interrupt to CPU
- CPU resumes normal execution
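The register-level behaviour of these steps can be sketched with a toy controller. This is an assumption-laden model: the class and method names are invented, bus arbitration is omitted, and the completion interrupt is represented only by a `done` flag.

```python
class DMAController:
    """Toy DMA controller with address, word count, and status registers."""

    def __init__(self, memory):
        self.memory = memory
        self.address = 0      # current address register
        self.count = 0        # word count register
        self.done = False     # status flag set at terminal count

    def program(self, start_address, word_count):
        # Performed by the CPU before the transfer starts
        self.address = start_address
        self.count = word_count
        self.done = False

    def transfer_word(self, word):
        # One device-to-memory transfer, carried out without the CPU
        if self.count == 0:
            raise RuntimeError("no transfer programmed")
        self.memory[self.address] = word
        self.address += 1     # address register auto-increments
        self.count -= 1       # word count register decrements
        if self.count == 0:
            self.done = True  # terminal count: would raise the interrupt


def dma_read_block(dma, device_words):
    """Feed words from a device into the controller; return the done flag."""
    for word in device_words:
        dma.transfer_word(word)
    return dma.done
```

After programming a start address of 2 and a count of 3, three device words land in memory locations 2 to 4 and the `done` flag is set.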

DMA Transfer Modes

1. Burst Mode (Block Transfer)

- Transfers entire block at once
- CPU inactive during transfer
- Fastest mode
- Best for large data (disk transfer)

2. Cycle Stealing Mode

- Transfers one word per stolen bus cycle
- DMA “steals” bus briefly
- CPU loses only one cycle each time
- Balanced CPU + DMA operation

3. Transparent Mode

- DMA transfers only when CPU is not using the bus
- No CPU interruption
- Slowest mode, but it never delays the CPU
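The difference between burst mode and cycle stealing shows up clearly in who owns the bus on each cycle. The sketch below is a simplified timeline model with hypothetical names; it assumes strict alternation for cycle stealing and leaves out transparent mode, which would need a model of when the CPU is idle.

```python
def bus_schedule(mode, dma_words, cpu_cycles):
    """Return the bus owner per cycle: 'D' for DMA, 'C' for CPU."""
    timeline = []
    if mode == "burst":
        timeline += ["D"] * dma_words      # DMA holds the bus for the whole block
        timeline += ["C"] * cpu_cycles     # CPU runs only afterwards
    elif mode == "cycle_stealing":
        while dma_words or cpu_cycles:
            if dma_words:
                timeline.append("D")       # steal one bus cycle
                dma_words -= 1
            if cpu_cycles:
                timeline.append("C")       # hand the bus back to the CPU
                cpu_cycles -= 1
    else:
        raise ValueError(f"unknown mode: {mode}")
    return "".join(timeline)
```

For three DMA words and three CPU cycles, burst mode gives `DDDCCC` while cycle stealing gives `DCDCDC`: the total is the same, but the CPU is never locked out for long.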

DMA vs Programmed I/O

| Feature | Programmed I/O | DMA |
|---|---|---|
| CPU involvement | High | Very Low |
| Speed | Slow | Fast |
| CPU utilization | Poor | Efficient |
| Transfer size | Byte-by-byte | Block |

Advantages of DMA

- High-speed transfer
- Frees CPU for other work
- Better throughput
- Efficient for large data blocks
- Lower per-word overhead and more predictable transfer timing

Disadvantages of DMA

- Complex hardware
- Bus contention with CPU
- Harder debugging
- Not economical for small transfers
- Choosing a transfer mode means trading transfer speed against CPU stalls

Applications of DMA

- Hard disks / SSD controllers
- Graphics cards
- Sound cards
- Network interface cards
- Real-time systems

**********************************************************************

I/O Processor (IOP)

Definition:

An I/O Processor (IOP) is a special-purpose processor that controls and manages input/output operations independently of the main CPU. Its main goal is to reduce CPU workload and improve overall system performance.

Needs

- In early systems, the CPU handled:
  - Program execution
  - Data transfer
  - I/O device control
- This caused CPU time wastage and slow performance.
- Solution: Use a separate I/O Processor to handle I/O tasks.

Main Functions of IOP

- Controls I/O devices
- Transfers data between devices and main memory
- Executes I/O instructions/programs
- Handles interrupts and I/O errors
- Reports completion status to CPU
- Performs buffering, error checking, and data conversion

Key Characteristics

- Handles I/O tasks independently
- Reduces CPU load
- Works concurrently with CPU
- Can execute its own I/O instructions
- Supports Direct Memory Access (DMA)
- Can work in master/slave mode (CPU initiates, IOP executes)

Working of IOP (Steps)

- CPU sends I/O command to IOP
- CPU continues other work
- IOP selects device and manages transfer
- Data moves directly between device and memory
- After completion, IOP sends interrupt to CPU

➡ CPU involvement is minimal
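As a rough illustration of an IOP executing its own I/O program, the sketch below runs a list of commands against simulated devices and memory. The command format `(op, device, address, count)`, the `READ`/`WRITE` names, and the `"DONE"` status are all invented for this example and do not correspond to any real channel command set.

```python
def iop_execute(channel_program, devices, memory):
    """Run a list of I/O commands on behalf of the CPU; return a status string."""
    for op, device, address, count in channel_program:
        if op == "READ":        # device -> memory
            memory[address:address + count] = devices[device][:count]
        elif op == "WRITE":     # memory -> device
            devices[device] = list(memory[address:address + count])
        else:
            raise ValueError(f"unknown I/O command: {op}")
    return "DONE"               # stands in for the completion interrupt
```

The CPU's only job in this model is to hand over the command list and, later, to look at the returned status.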

IOP vs CPU (Difference)

- CPU: Executes main programs, complex instruction set, very high speed
- IOP: Manages I/O, simpler instruction set, moderate speed, specialized work

IOP vs DMA Controller

- IOP: Intelligent, executes programs, full device control, very low CPU involvement, costly
- DMA: Less intelligent, no program execution, limited control, partial CPU involvement, cheaper

Advantages

- Reduces CPU load
- Enables parallel processing
- Improves system throughput
- Handles multiple I/O devices efficiently
- Suitable for high-speed systems

Disadvantages

- High hardware cost
- Complex design
- Not suitable for small systems

Applications

- Mainframe computers
- Supercomputers
- High-performance servers
- Real-time systems
- Industrial automation systems

*************************************************************************
